Just as perfectly replicated, mass-produced plastic toys left their destruction as a child’s only possible expression of creativity, might artificial intelligence, in rendering human creativity and emotion irrelevant, destroy our connection to music?

Artificial intelligence has the potential to provide deep support at countless points in the music learning process, but without the infrastructure and workflows in place supporting freedom of emotion and identification, these opportunities are severely compromised.

If the music education industry's fascination with artificial intelligence (and a host of other digital frontiers) is anything to go by, human emotion and creativity are to be muscled out of the equation. The industry has its sights on our wetware and on a drip-feed of generative (machine-created) music "in harmony" with our musical tastes and preferences.

It is difficult to overstate the pressing need for vastly expanded and far more data-oriented music technology platforms (or stacks) if the full, and above all liberating, benefits of artificial intelligence are to be felt in music learning.

Our brains, tuned over millions of years of evolution, may be superbly adapted to understanding the visual world, but to leverage this capability efficiently and in the moment, they need to be presented with coherent, accurate and timely learning information in visual form.

Many standalone (and for the moment rather isolated) applications of artificial intelligence in music (AIM) exist, but they suffer both from a lack of musical context (relevance to the user's current musical source) and from narrow scope (they ignore the incredible diversity of world instrumentation and supporting theory tools).

AI-assisted music apps are appearing in app stores, but these tend to focus on specific aspects of musicianship such as chord variety, often address only one instrument (guitar) and act entirely in isolation (with no musical context). These are classic, and next to useless, island solutions.

The central problem lies not in artificial intelligence but in the extraordinarily narrow scope of existing learning solutions: one music system (and musical culture), one rather limited form of notation, for the most part one instrument (guitar), and no theory. Given that the technology to take us much further has been around for decades, it's kind of depressing. We could be so much further along.

Music Visualization, Machine Learning And Artificial Intelligence

The new machine age is "digital, exponential and combinatorial" and characterised by "mind, not matter; brain not brawn; and ideas, not things" (Erik Brynjolfsson "Race with the machines").

I'm not so sure about the 'brain not brawn' bit. Humans are complex, emotional, self-aware beings. Neural networks are basically just logic cascades (algorithms) allowing decisions based on weighted evidence. These algorithms are trained to recognize which paths constitute successful outcomes.
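The "weighted evidence" idea can be made concrete with the simplest possible neural unit, a perceptron. What follows is a minimal Python sketch under stated assumptions: the two features (dissonance and loudness, scaled to 0..1), the "tense"/"relaxed" labels and the four training examples are invented purely for illustration and do not come from any real music system.

```python
# Minimal perceptron: a decision based on weighted evidence, with the weights
# learned from labelled examples. Features, labels and data are hypothetical.

def predict(weights, bias, features):
    """Return 1 if the weighted sum of the evidence exceeds zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0

def train(samples, epochs=20, lr=0.1):
    """Classic perceptron rule: nudge the weights towards correct outcomes."""
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        for features, target in samples:
            error = target - predict(weights, bias, features)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias

# Hypothetical evidence per passage: (dissonance, loudness), both scaled 0..1.
# Label 1 = "tense", 0 = "relaxed".
samples = [
    ((0.9, 0.8), 1),
    ((0.8, 0.6), 1),
    ((0.2, 0.3), 0),
    ((0.1, 0.7), 0),
]

weights, bias = train(samples)
print([predict(weights, bias, f) for f, _ in samples])  # [1, 1, 0, 0]
```

Training simply shifts the weights towards whichever evidence separates the labelled outcomes; here the learned weight for dissonance ends up larger than the one for loudness. Nothing in the loop resembles intuition, which is rather the point.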

What such an automaton effectively achieves through meticulous attention to detail, a human can still, at least in some cases, achieve through leaps of intuition. How long will it take to arrive at artificial intuition?

There is a technological threshold below which the banal can be delegated, and above which human empathy and emotional intelligence are freed. We need to raise that bar. Current music learning stacks are far too narrowly focussed: one music system (and musical culture), one rather limited form of notation, one instrument, no theory.

Much simplified, we have two choices in a musical future: either we delegate all music-making to the machine, or we develop automata that liberate us from current learning hurdles, giving free rein to our emotional intelligence and expressive capabilities.

The first path offers nothing more than highly empathetic automata equipped to replicate the finest of human motor skills, musical styles, creativity and tension. These will be indistinguishable from the real thing, and will elicit (read 'algorithmically trick') emotional responses from us.

Under the latter scenario, the automata support us in the entire learning chain, optimizing learning for any instrument configuration under any music system with any conceivable tuning - indeed being able to make constructive suggestions easing play at a host of levels. Potentially, we are talking not just of the accumulated knowledge of one instrumentalist, but the sum of knowledge across multiple world cultures. In a free-time future where real social connection (rather than digital projection), occupation for hand and mind, sufficiency and sustainability are crucial to wellbeing, which is the more desirable?

Though I have misgivings (see my closing remarks way below) about delegating too much of what relates to human wellbeing to machines, artificial and machine intelligence, kept focussed, have the potential to greatly enrich the online and remote music learning value chain.

We should perhaps distinguish here between machine learning, broadly understood as comprising classification, clustering, rule mining and deep learning, and artificial intelligence, comprising logic, reasoning, rule engines and the semantic web. Underlying both, however, are large collections of data. Together, they are known as 'machine intelligence' (MI).
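A toy contrast may help. Below, the same task (labelling a three-note chord as major or minor) is solved once with a hand-written rule table, in the spirit of logic and rule engines, and once with a nearest-centroid classifier learned from labelled examples, in the spirit of machine learning. It is a hypothetical Python sketch: chords are assumed to be MIDI note numbers in close root position, all names are illustrative, and nearest-centroid is chosen only because it is the shortest classifier to write.

```python
# Two ways to label a three-note chord as "major" or "minor".
# Input chords are MIDI note numbers in close root position (an assumption).

def intervals(chord):
    """Semitone steps between successive notes, e.g. C-E-G -> (4, 3)."""
    return tuple(b - a for a, b in zip(chord, chord[1:]))

# 1) Rule-based ("logic / rule engine" side): knowledge written down by hand.
RULES = {(4, 3): "major", (3, 4): "minor"}

def classify_by_rule(chord):
    return RULES.get(intervals(chord), "unknown")

# 2) Data-driven ("machine learning" side): learn an average interval
#    pattern (centroid) per label from examples, then pick the nearest one.
def fit_centroids(examples):
    sums, counts = {}, {}
    for chord, label in examples:
        iv = intervals(chord)
        s = sums.setdefault(label, [0.0] * len(iv))
        for i, v in enumerate(iv):
            s[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in s] for label, s in sums.items()}

def classify_by_data(centroids, chord):
    iv = intervals(chord)

    def dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(iv, centroid))

    return min(centroids, key=lambda label: dist(centroids[label]))

training = [((60, 64, 67), "major"), ((62, 66, 69), "major"),
            ((60, 63, 67), "minor"), ((57, 60, 64), "minor")]
centroids = fit_centroids(training)

print(classify_by_rule((65, 69, 72)))             # major (F-A-C)
print(classify_by_data(centroids, (65, 69, 72)))  # major
```

Both halves still rest on data: the rule table encodes it by hand, while the classifier extracts it from examples.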

Both have been around for a while now, and there are already many open-source machine learning libraries. To name just a few: Torch, Caffe (released by Berkeley Vision) and the University of Montreal's Theano. Google open-sourced its TensorFlow AI engine last year; it is claimed to combine the best of Torch, Caffe and Theano. The curious can even dabble online. Slowly but surely, our web browsers are being infused with artificial intelligence.

Perhaps of more interest to everyman is that artificial intelligence capabilities are being brought into the browser in a form that allows anyone to experiment with learning automata. Keywords here are ConvNetJS from Stanford University, the TensorFlow Playground and the closely related TensorFire. The related educational material is also being brought online.

"Machine intelligence (MI) can do a lot of creative things; it can cope with music generation, create art from and with music, mash up existing content, reframe it to fit a new context, fill in gaps in an appropriate fashion, or generate potential solutions given a range of parameters". -Carlos E. Perez

In a musical context, machine and artificial intelligence can be used to solve problems ranging from the more or less cosmetic all the way down to the logical: from suggesting genre-authentic stylistic variations in play, through fingering optimization across multiple instrument configurations, down to supporting automated tool configuration processes.
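Fingering optimization across instrument configurations can be framed as a shortest-path search. The Python sketch below is a minimal illustration under my own assumptions, not an existing tool: a fretted instrument is described only by its open-string pitches (as MIDI numbers) and a fret count, and the movement cost (fret shift plus a half-point penalty per string crossed) is a hypothetical stand-in for a real ergonomic model. Dynamic programming then picks, for each note, the string and fret that minimize total hand movement.

```python
def positions(pitch, tuning, frets):
    """All (string, fret) pairs that sound `pitch` on this instrument config."""
    return [(s, pitch - open_pitch)
            for s, open_pitch in enumerate(tuning)
            if 0 <= pitch - open_pitch <= frets]

def move_cost(a, b):
    """Hypothetical cost of moving from position a to b."""
    (s1, f1), (s2, f2) = a, b
    return abs(f1 - f2) + 0.5 * abs(s1 - s2)

def best_fingering(melody, tuning, frets=12):
    """Dynamic programming over candidate positions (a Viterbi-style search)."""
    if not melody:
        return []
    layers = [positions(p, tuning, frets) for p in melody]
    if any(not layer for layer in layers):
        raise ValueError("melody contains a pitch outside this instrument's range")
    cost = {pos: 0.0 for pos in layers[0]}  # cheapest path cost ending at pos
    back = []                               # per step: position -> predecessor
    for layer in layers[1:]:
        new_cost, prev = {}, {}
        for pos in layer:
            best = min(cost, key=lambda q: cost[q] + move_cost(q, pos))
            new_cost[pos] = cost[best] + move_cost(best, pos)
            prev[pos] = best
        cost = new_cost
        back.append(prev)
    # Trace back from the cheapest final position.
    pos = min(cost, key=cost.get)
    path = [pos]
    for prev in reversed(back):
        pos = prev[pos]
        path.append(pos)
    return list(reversed(path))

guitar = (40, 45, 50, 55, 59, 64)   # standard tuning E2 A2 D3 G3 B3 E4
dadgad = (38, 45, 50, 55, 57, 62)   # DADGAD: same code, different configuration
melody = [60, 62, 64, 65, 67]       # C4 D4 E4 F4 G4
print(best_fingering(melody, guitar))
print(best_fingering(melody, dadgad))
```

Swapping the tuning tuple is all it takes to re-plan the fingering for another configuration; a fuller model would add hand span, finger assignment and genre-specific playing conventions.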

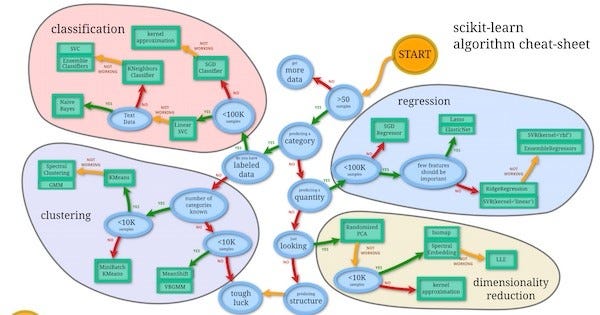

As the diagram above suggests, there is a wide range of potential applications in music, but for the moment (and especially until data handling and interrogation are consistent across the entire stack), these remain hamstrung. A general-purpose, layered music intelligence engine, and especially one suited to web applications, is still a long way off.

To my mind, the most pressing application of artificial intelligence at present is the automatic synchronization of video, audio and notation feeds from disparate, near-but-not-quite-simultaneous sources whose timing may be subject to drift or offset. This applies immediately to tasks such as synchronising webcam video with microphone audio, undertaken daily by thousands of musicians around the globe. Fully automated, and especially if open-sourced, such synchronization would be of huge assistance in getting P2P teaching off the ground. One approach tolerant of variation in playing speed is based on a so-called beatmap file, but it still involves several manual steps.
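Both kinds of misalignment mentioned here, offset and drift, can be sketched in a few lines of Python. This is a hypothetical illustration, not the beatmap-based approach referred to above: a constant offset is estimated by brute-force cross-correlation of two onset envelopes (lists in which 1 marks a detected note onset per analysis frame, an assumed representation), and gradual drift is handled by mapping times through a list of matched beat pairs, interpolating linearly between them. Real audio and video would first need onset detection, which is out of scope here; all names are illustrative.

```python
from bisect import bisect_right

def estimate_offset(ref, other, max_lag):
    """Return the lag (in frames) for which other[n + lag] best matches ref[n],
    by brute-force cross-correlation over lags -max_lag..max_lag."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(ref[n] * other[n + lag]
                    for n in range(len(ref))
                    if 0 <= n + lag < len(other))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def map_time(t, beatmap):
    """Map time t (seconds) from one stream onto another via matched beats.
    `beatmap` is a sorted list of at least two (source_time, target_time) pairs.
    Between beats we interpolate linearly; outside, the nearest segment is extended."""
    if len(beatmap) < 2:
        raise ValueError("need at least two matched beats")
    sources = [s for s, _ in beatmap]
    i = min(max(bisect_right(sources, t) - 1, 0), len(beatmap) - 2)
    (s0, d0), (s1, d1) = beatmap[i], beatmap[i + 1]
    return d0 + (t - s0) * (d1 - d0) / (s1 - s0)

# Hypothetical onset envelopes: the webcam audio starts 3 frames late.
mic = [0, 0, 1, 0, 0, 0, 1, 0, 0, 1]
webcam = [0, 0, 0] + mic
print(estimate_offset(mic, webcam, max_lag=5))  # 3

# Hypothetical beatmap: the second stream runs slightly slower over time.
beats = [(0.0, 0.50), (1.0, 1.52), (2.0, 2.55)]
print(map_time(1.5, beats))
```

Estimating a fixed offset needs no musical knowledge at all; drift is where a learning system could genuinely help, by finding the matching beat pairs automatically rather than by hand.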

Other applications include resolving subtle visual interaction issues between notation, instrument models and theory tools. Tiny anticipations and delays, as in music itself, can add greatly to the overall music visualization user experience.

Indeed, with its clear focus on data, the music aggregator platform will, it is hoped, act as a honeypot for AI and machine intelligence solutions in online music teaching and visualization. In this sense, it can be understood as an integration platform. Have a lead? Feel free to get in contact. ;-)

AI has the potential to solve challenges at many levels in end-to-end online and remote or P2P music learning environments. Some of these relate to constructing the platform's own artifacts, others to supporting the learner, or, indeed, a remote teacher.

Why Incorporate Artificial Intelligence?

So, keeping in mind that we are talking about any music system (and musical culture), any form of notation, any instrument configuration and any theory tool:

So let's see how some of these might have practical and visible impact on our aggregator platform...

Away from the browser (and with it, our aggregator platform), there are further possibilities.

The above "User friendly Machine Learning Map" provides the complete flow for solving a machine learning (ML) problem.

In the original (follow the link), you can click on any algorithm on the map to understand its implementation.

Finally, a cautionary word. Since the early days of the internet, we have been obliged to police the boundary between machine and man. Increasingly, both attack and policing are done by largely autonomous software built on deep learning principles. The former scours defenses for potential weaknesses; the latter monitors interaction behaviors, looking for unusual patterns. Already, our online security is passing out of our hands.

© Copyright 2015 The Visual Future of Music.

Comments, questions and (especially) critique welcome.